Supercharge growth with Batch CSV automation

Visit the Batch CSV docs for more product information. Need to chat? Talk with our data experts about Batch CSV.

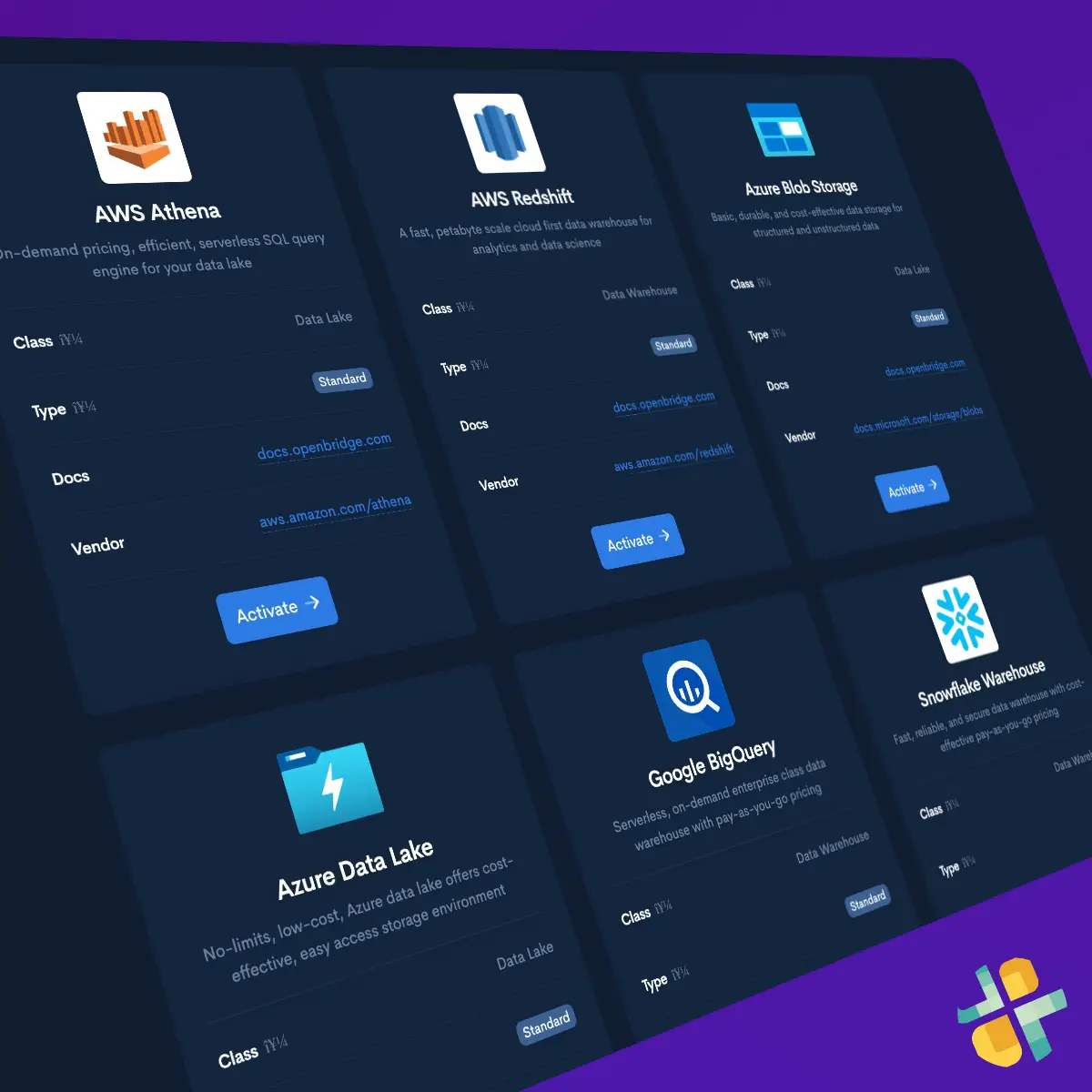

Create Data Destination

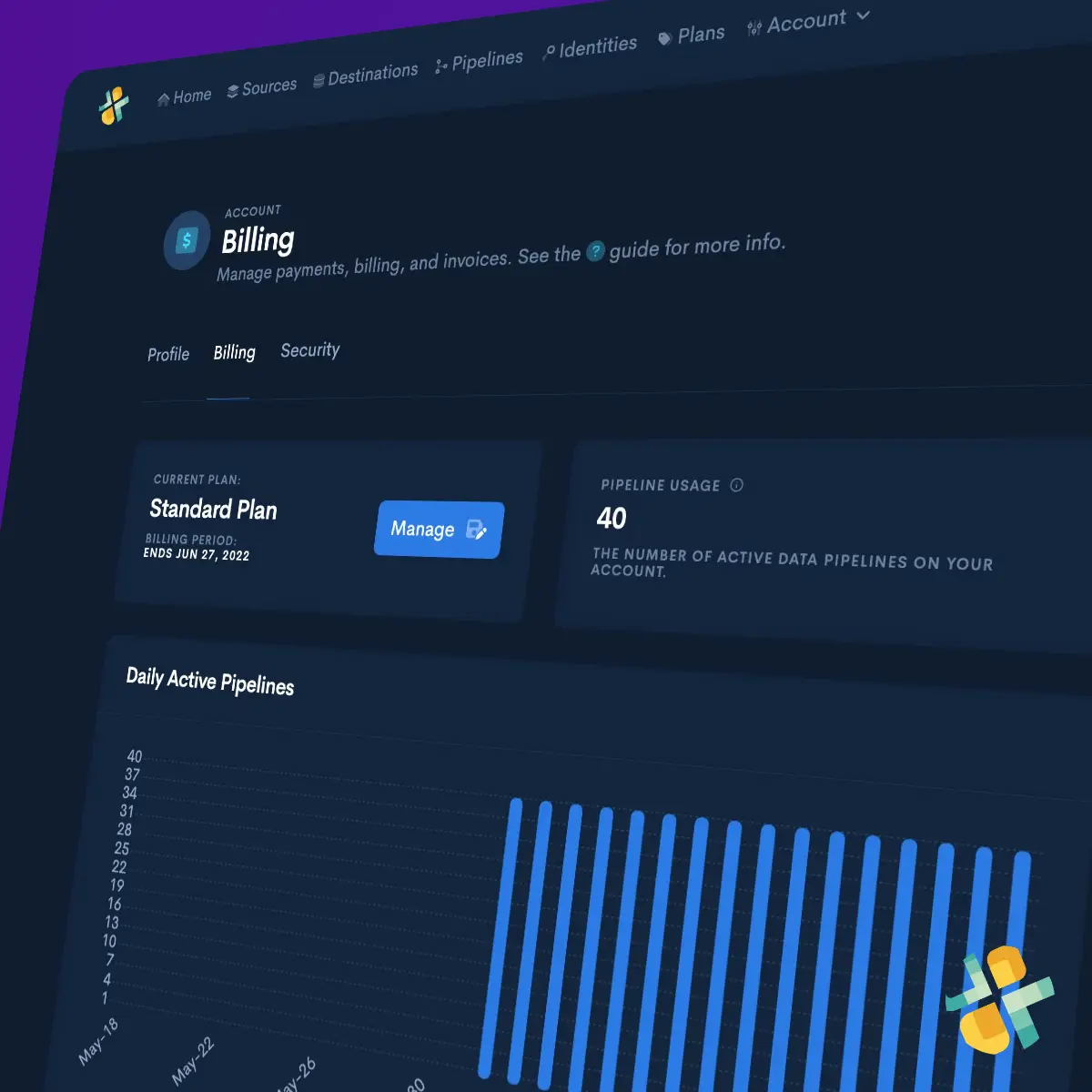

You can quickly create a private, trusted destination for Batch CSV data in a cloud data warehouse or data lake such as Databricks, Amazon Redshift, Amazon Redshift Spectrum, Google BigQuery, Snowflake, Azure Data Lake, or Amazon Athena.

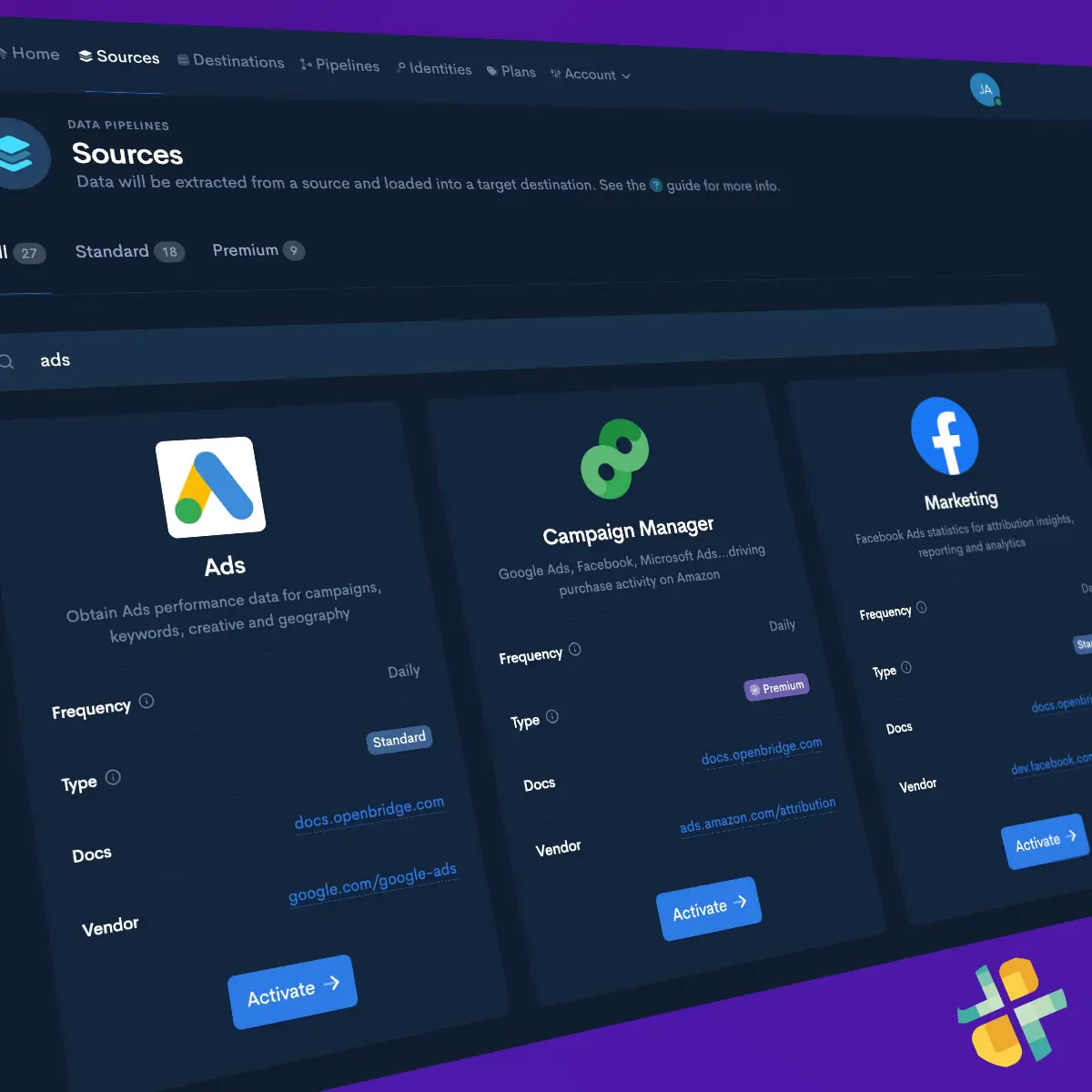

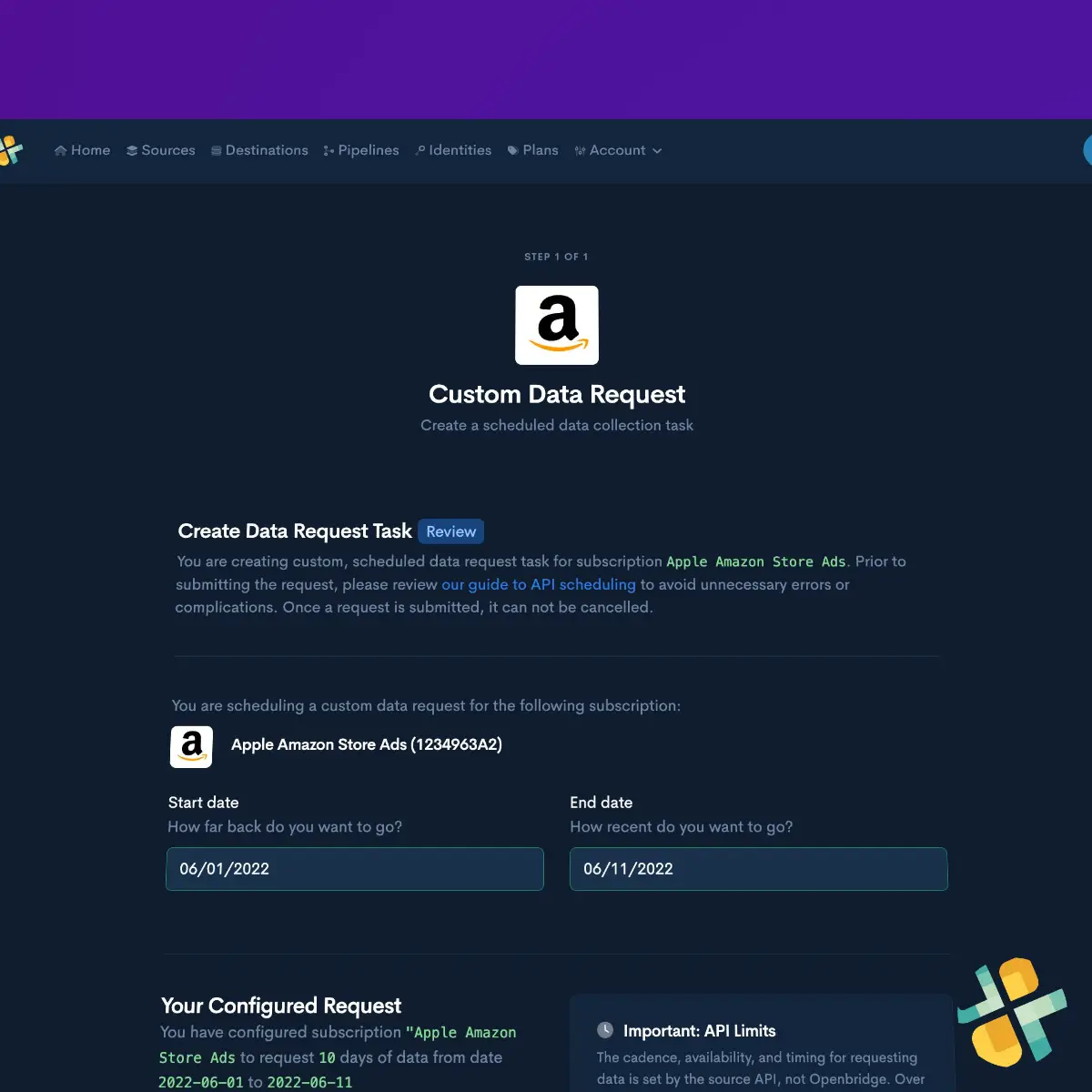

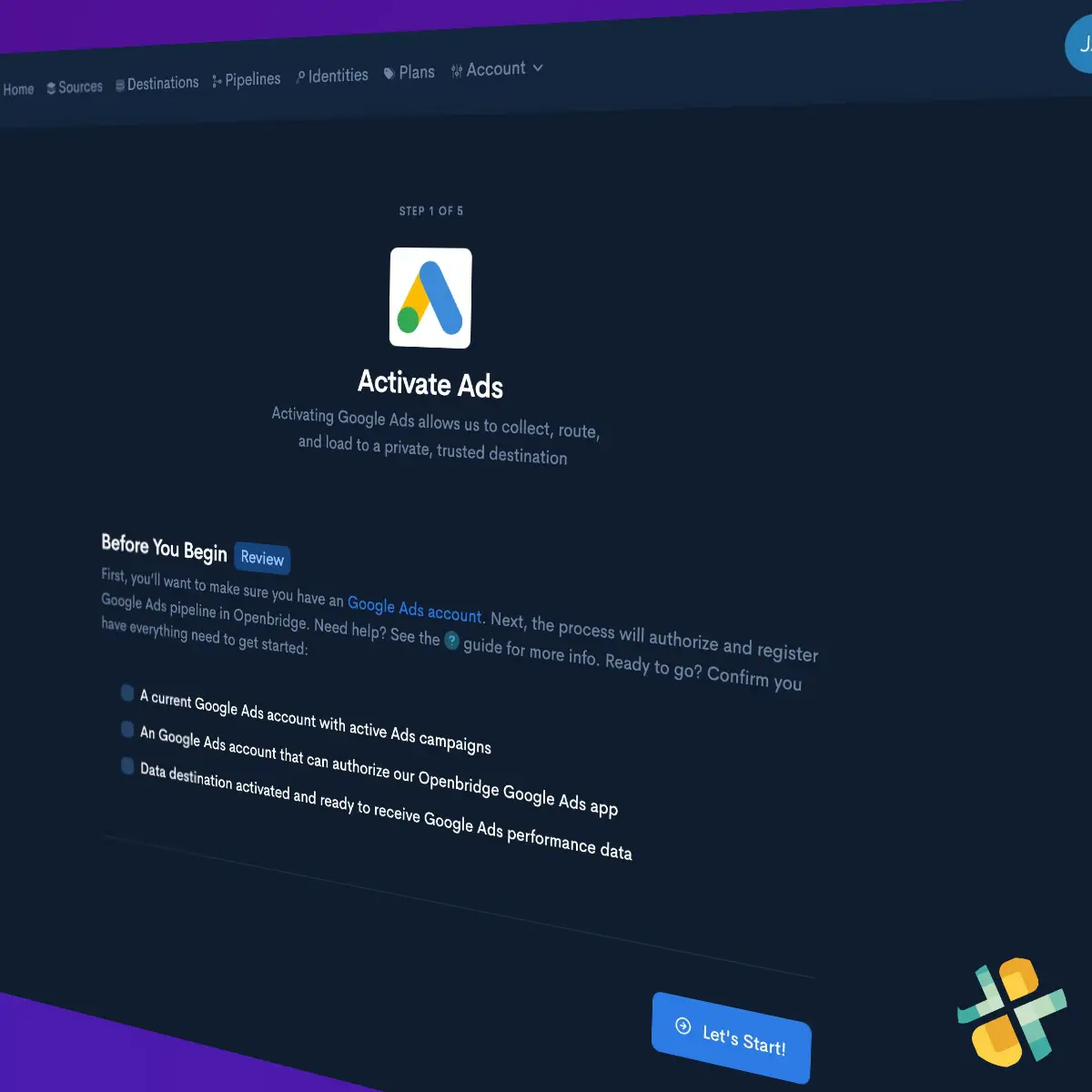

See data destinations

Activate Data Pipeline

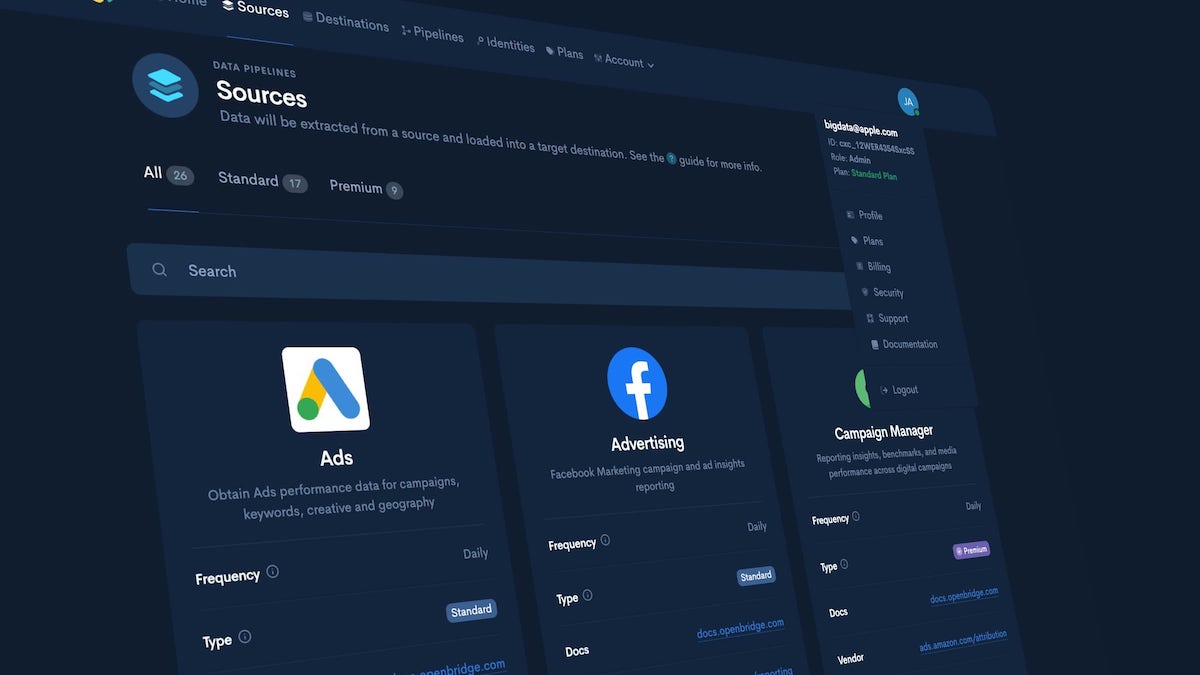

Using Batch CSV certified APIs, you can quickly and securely activate code-free, fully automated data pipelines that deliver data to a private, trusted destination you own.

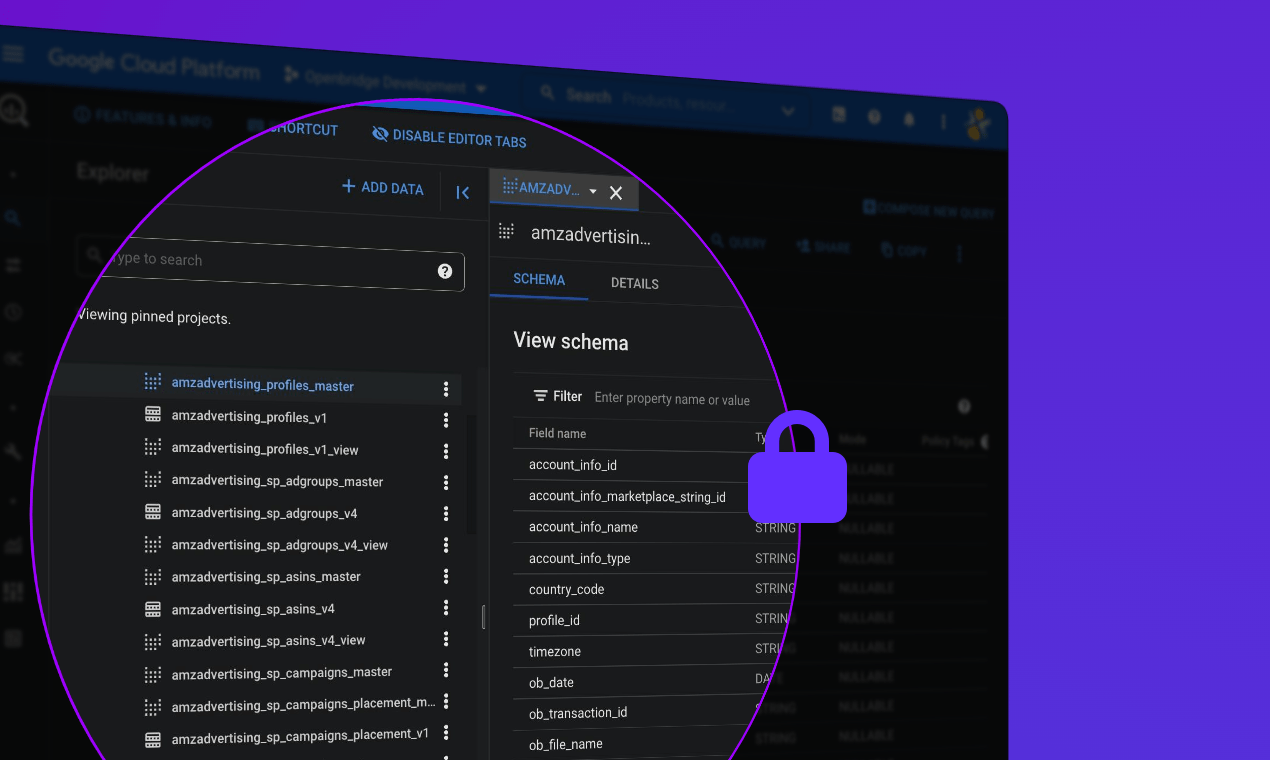

See integrations

Explore Your Data

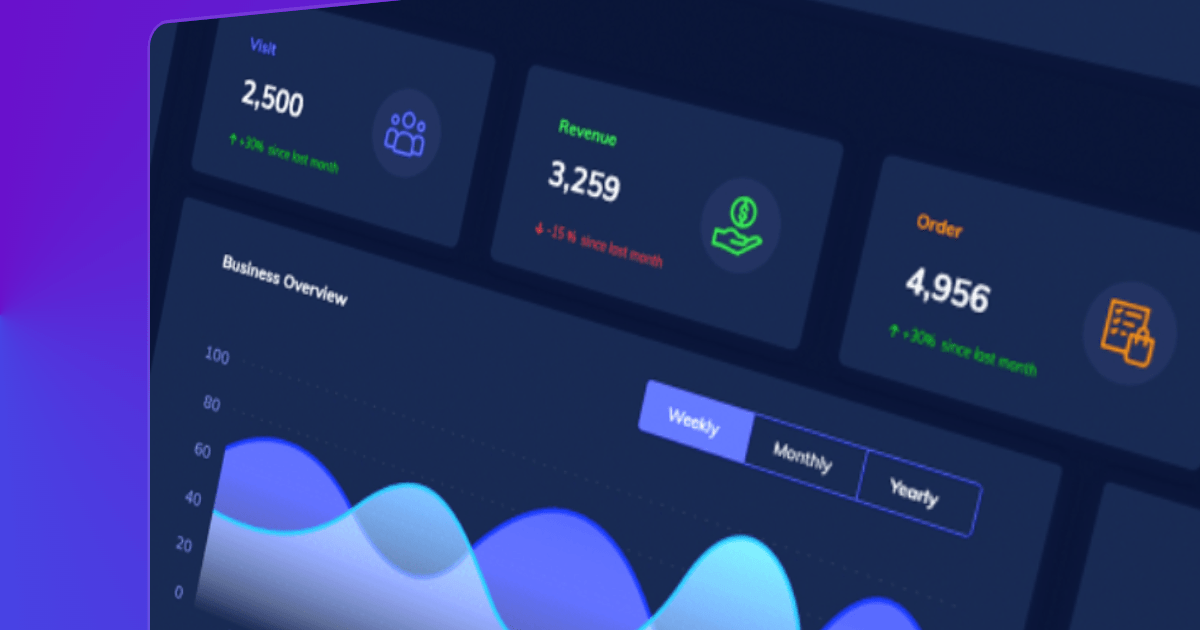

Unlocked Batch CSV data turbocharges the data tools you love, such as Tableau, Microsoft Power BI, Looker, Amazon QuickSight, Looker Studio, and many others, with reliable, integrated data automation.

See data tools