Industry-Leading Technology Partners

Break down data silos and unify your customer touch points with our code-free, fully automated pipelines.

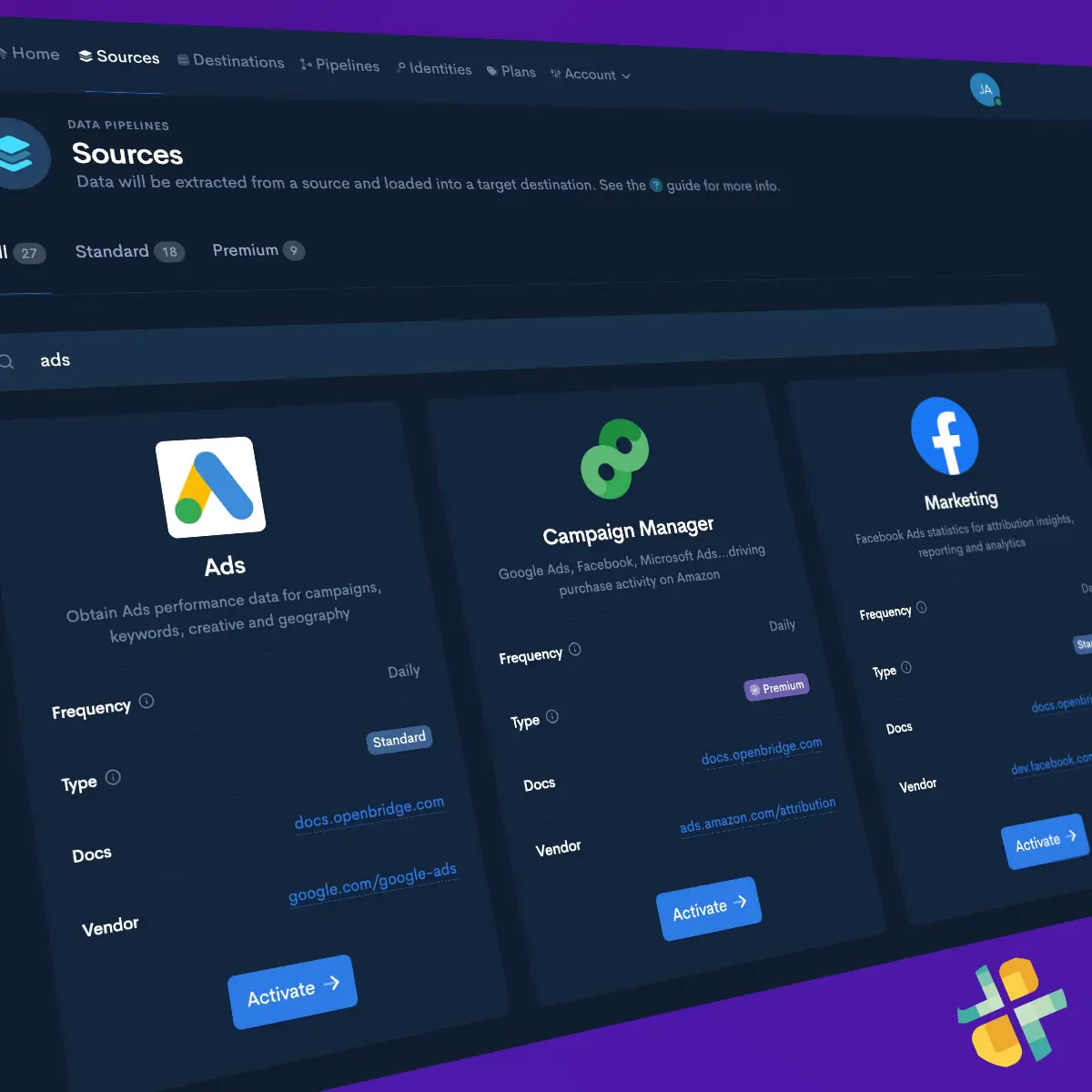

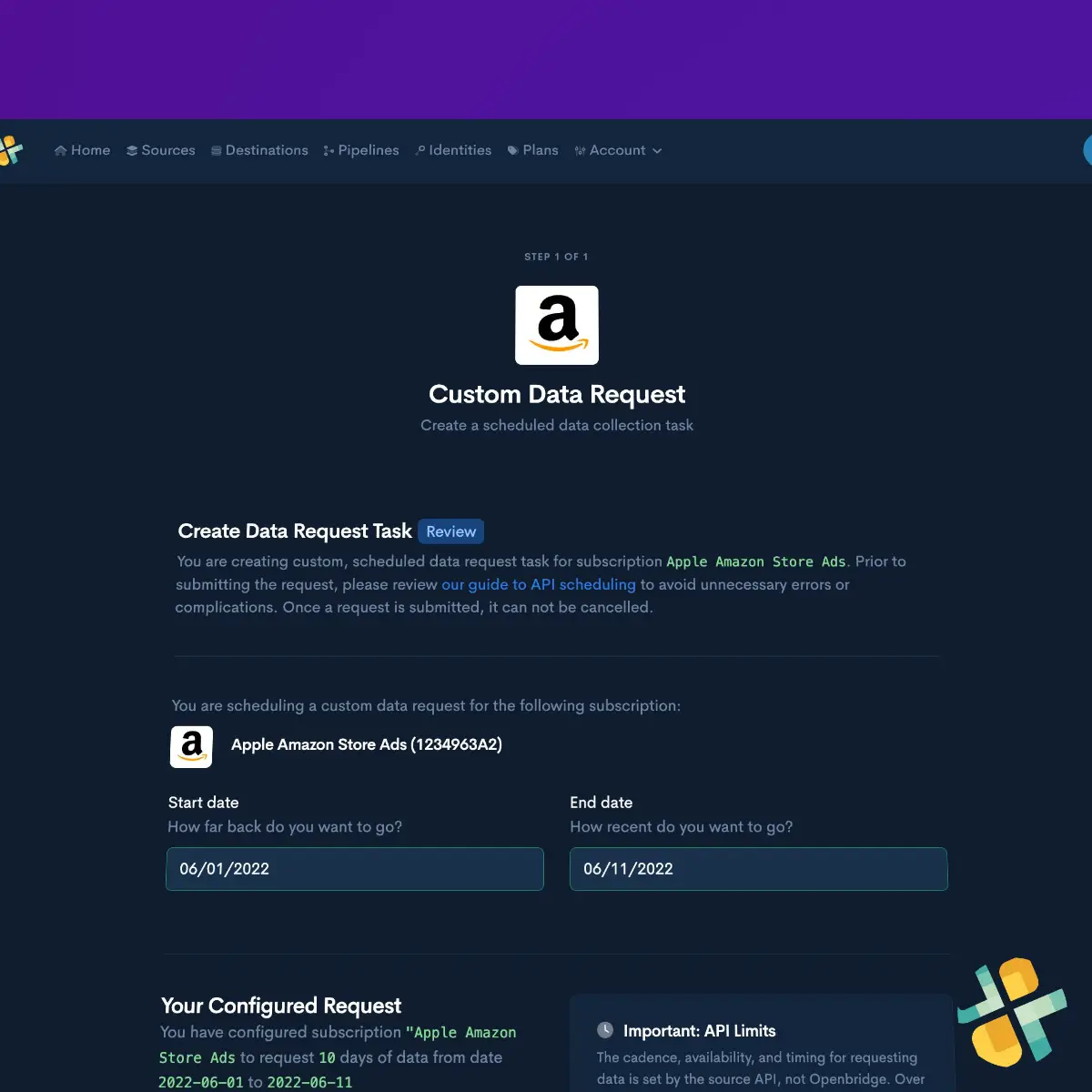

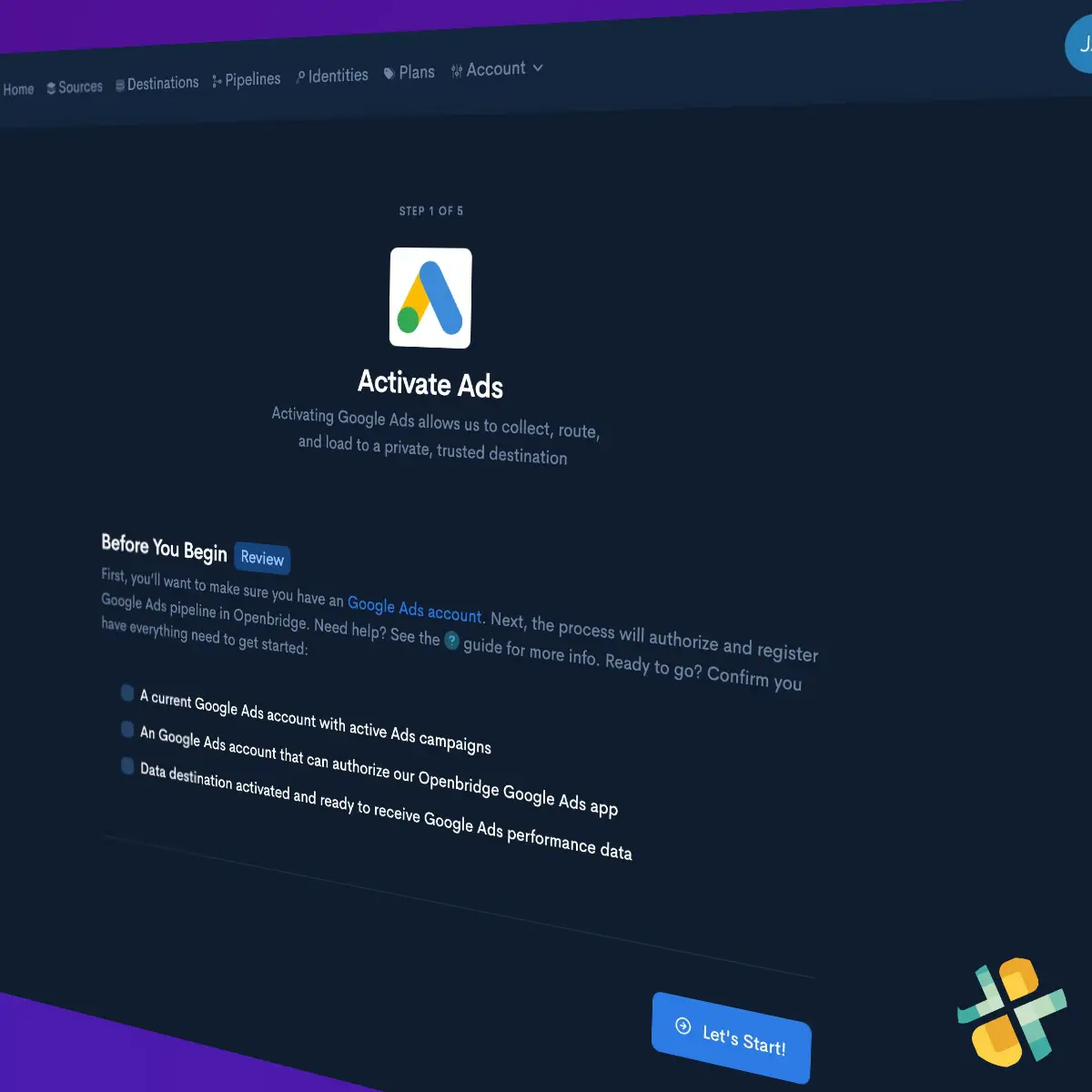

Simply select, collect, and unify data in a private destination.

No manual downloads. Select, connect, and load your data.

Ready-to-go, registered, tested, and approved program applications.

Code-free automation for all the ways data can unlock performance and growth.

Automate and unify data to increase productivity for all the ways you want to work.

Break down data silos and unify your customer touch points with our code-free, fully automated pipelines to a trusted data destination owned by you.

Turn data into actionable opportunities with Microsoft Power BI data visualization and reporting tools.

Looker Studio unlocks the power of your data with interactive and beautiful reports for smarter decisions.

Looker is a BI platform that helps you easily explore, analyze, and share real-time business analytics.

A leader in visual analytics for business intelligence. See and understand any data with Tableau.

Collaborative data platform from Mode Analytics combines SQL, R, Python, and visual analytics in one place.

Azure Data Factory service allows teams to compose storage, movement, and processing into automated data workflows.

Business intelligence software from Grow unlocks insights you desperately need to fuel business growth.

Domo lets you turn data into live visualizations, and extend BI into apps that empower your team with data.

Unlock hidden insights in your data with Alteryx. Simplify and unify analytics, turning data into breakthroughs.

Sisense is an industry leader in analytics: easily prepare, analyze, and explore data from multiple data destinations.

Azure Machine Learning simplifies the way teams design, deploy, and manage models as a cloud service.

Cost-effective Google Dataproc is a fast, easy-to-use, fully managed cloud service for Apache Spark and Hadoop.

Open-source dbt is a transformation tool for analysts and engineers to transform, test and document data.

Self-service Tableau Prep allows teams to quickly and confidently combine, shape, and clean data for analysis.

BI and analytics platform from MicroStrategy helps teams with cloud-based, intelligent insights solutions.

Google Dataprep is an intelligent cloud service to explore, clean, and prepare data for analysis or machine learning.

SAP Analytics Cloud offers reporting, data visualization, and improved business intelligence on a cloud platform.

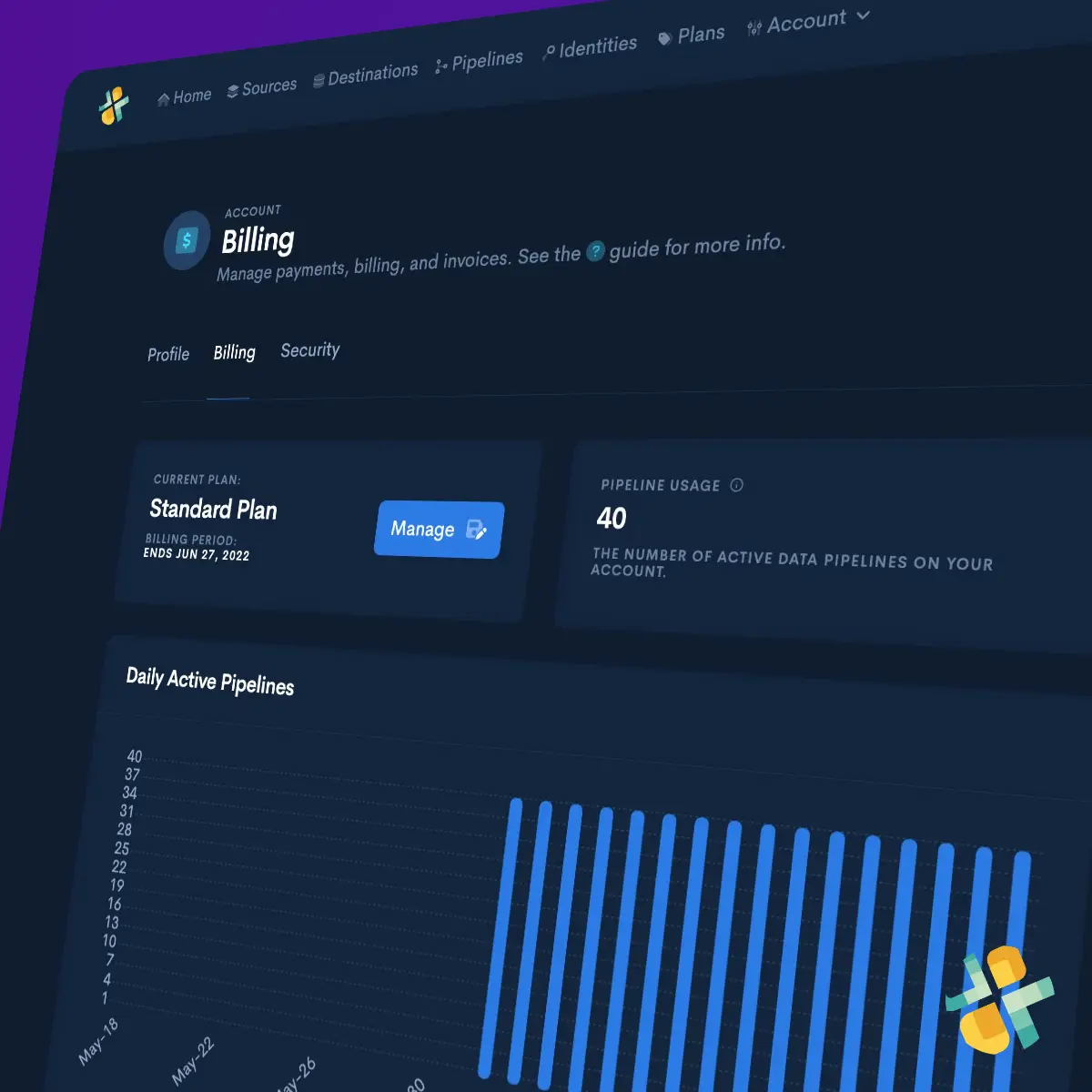

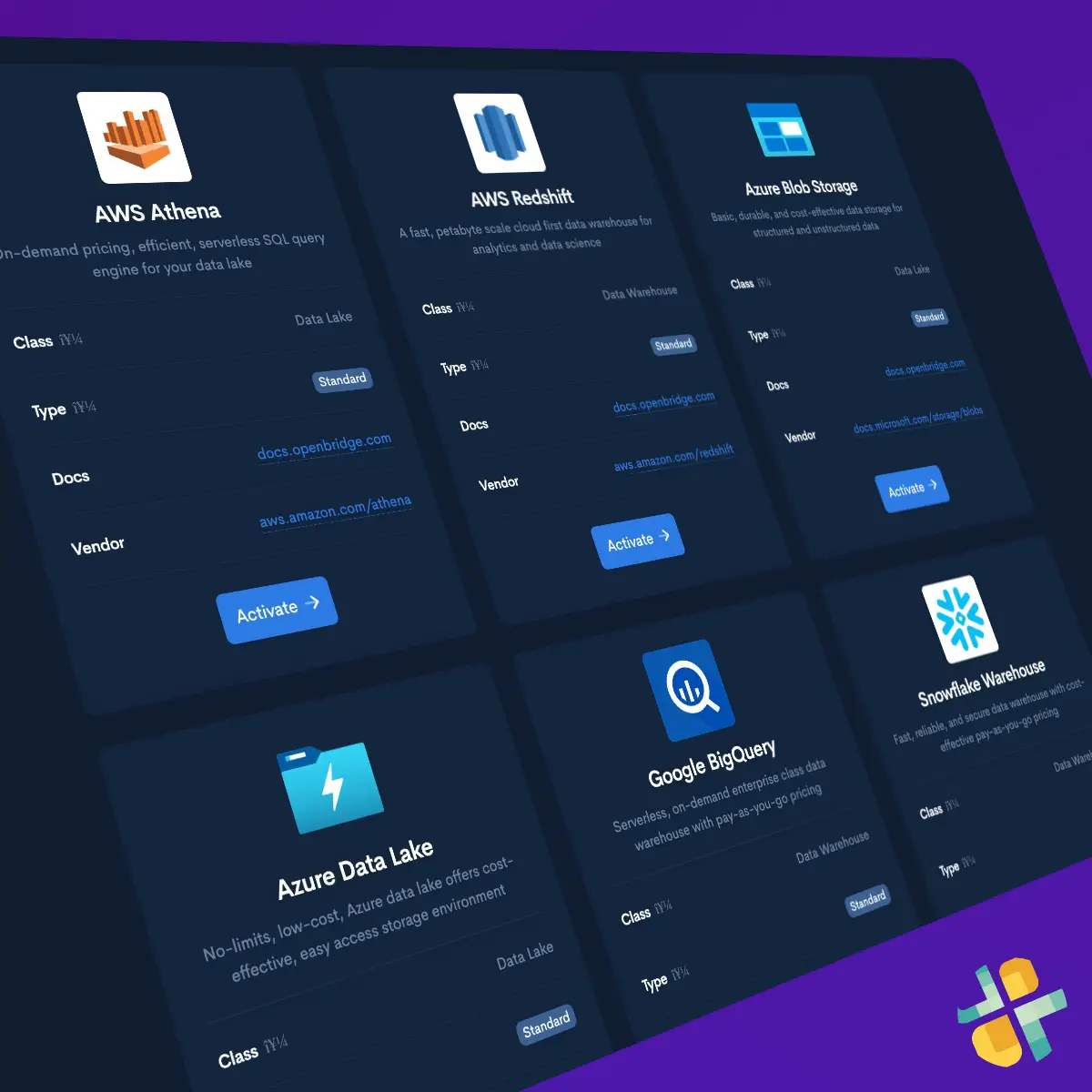

Code-free, automated pipelines process, route, and load data into industry-leading data lakes or warehouses owned by you.

Amazon Athena is serverless, so there is no infrastructure to manage, and you pay only for the queries you run.

Securely run analytics, Power BI, Data Factory, and many other services on Azure Data Lake.

Databricks is a data lakehouse platform that lets you run all your SQL and BI applications at scale.

Amazon S3 Data Lake securely and reliably scales to support the most demanding workflows.

Amazon Redshift Spectrum queries data from your data lake without having to load the data into Redshift.

"Access to data means the team can work smarter. End-to-end marketing and advertising campaign data in a unified data lake ensures we can optimize sales based on learnings from our measurement efforts."

Our customers retain complete control and ownership over their data. Data is always stored in a private, trusted data lake, data lakehouse, or cloud warehouse in a region of their choosing, ensuring they have the information to fuel the tools their teams love to use.

Get started using automated, code-free data ingestion with a 30-day trial of Openbridge, free of charge.

30-day free trial • Quick setup • No credit card, no charge, no risk